Josh, I’ve been hearing a lot about ‘AI-generated art’ and seeing a whole lot of truly insane-looking memes. What’s going on, are the machines picking up paintbrushes now?

Not paintbrushes, no. What you’re seeing are neural networks (algorithms that supposedly mimic how our neurons signal each other) trained to generate images from text. It’s basically a lot of maths.

Neural networks? Generating images from text? So, like, you plug ‘Kermit the Frog in Blade Runner’ into a computer and it spits out pictures of … that?

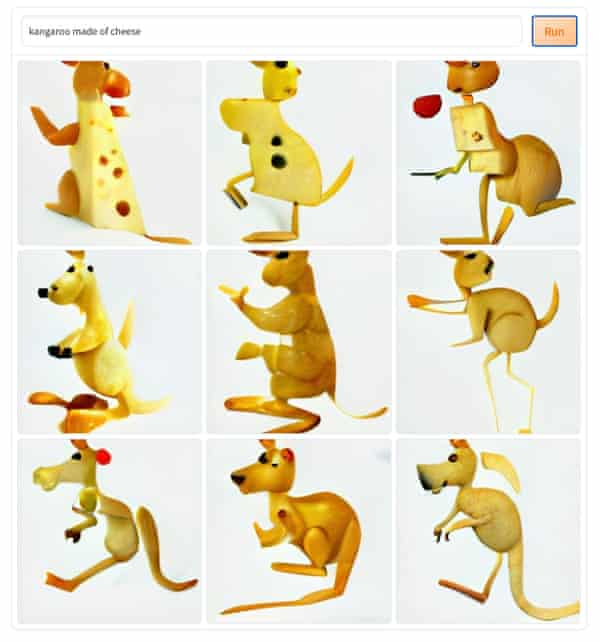

You aren’t thinking outside the box enough! Sure, you can create all the Kermit images you want. But the reason you’re hearing about AI art is because of the ability to create images from ideas no one has ever expressed before. If you do a Google search for “a kangaroo made of cheese” you won’t really find anything. But here’s nine of them generated by a model.

You mentioned that it’s all a load of maths before, but – putting it as simply as you can – how does it actually work?

I’m no expert, but essentially what they’ve done is get a computer to “look” at millions or billions of pictures of cats and bridges and so on. These are usually scraped from the internet, along with the captions associated with them.

The algorithms identify patterns in the images and captions and eventually can start predicting which captions and images go together. Once a model can predict what an image “should” look like based on a caption, the next step is reversing it – creating entirely novel images from new “captions”.
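For the curious, here is a toy sketch of the “which caption goes with which image” idea. It assumes captions and images have already been turned into short lists of numbers; the vectors, file names and the `best_caption` helper are all made up for illustration, whereas a real model learns its vectors from millions of scraped pairs.

```python
import math

def cosine(a, b):
    """Cosine similarity: close to 1.0 when two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

# Hypothetical, hand-picked vectors standing in for what training would learn.
caption_vecs = {
    "a cat on a sofa": [0.9, 0.1, 0.0],
    "a bridge at sunset": [0.1, 0.9, 0.2],
}
image_vecs = {
    "cat.jpg": [0.8, 0.2, 0.1],
    "bridge.jpg": [0.0, 1.0, 0.3],
}

def best_caption(image_name):
    """Pick the caption whose vector is most similar to the image's vector."""
    img = image_vecs[image_name]
    return max(caption_vecs, key=lambda c: cosine(caption_vecs[c], img))

print(best_caption("cat.jpg"))
print(best_caption("bridge.jpg"))
```

Training, roughly speaking, is the process of nudging those numbers until matching pairs score high and mismatched pairs score low.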

DALL·E mini is an AI model that generates images from any prompt you give https://t.co/q8KgTWdYFH pic.twitter.com/BDqBMaO5eH

When these programs are making new images, is it finding commonalities – like, all my images tagged ‘kangaroos’ are usually big blocks of shapes like this, and ‘cheese’ is usually a bunch of pixels that look like this – and just spinning up variations on that?

It’s a bit more than that. If you look at this blog post from 2018 you can see how much trouble older models had. When given the caption “a herd of giraffes on a ship”, it created a bunch of giraffe-coloured blobs standing in water. So the fact we are getting recognisable kangaroos and several kinds of cheese shows how there has been a big leap in the algorithms’ “understanding”.

Dang. So what’s changed so that the stuff it makes doesn’t resemble completely horrible nightmares any more?

There’s been a lot of developments in techniques, as well as the datasets that they train on. In 2020 a company named OpenAI released GPT-3 – an algorithm that is able to generate text eerily close to what a human could write. One of the most hyped text-to-image generating algorithms, DALL·E, is based on GPT-3; more recently, Google released Imagen, using their own text models.

These algorithms are fed huge amounts of data and forced to do thousands of “exercises” to get better at prediction.

‘Exercises’? Are there still actual people involved, like telling the algorithms if what they’re making is right or wrong?

Actually, this is another big development. When you use one of these models you’re probably only seeing a handful of the images that were actually generated. Similar to how these models were initially trained to predict the best captions for images, they only show you the images that best match the text you gave them. They are marking themselves.
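A minimal sketch of that “marking themselves” step, under some loud assumptions: the prompt and each generated image are represented by made-up vectors, the 20 candidates are random stand-ins for real generated images, and `rerank` is a hypothetical helper rather than any real product’s API.

```python
import math
import random

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def rerank(prompt_vec, candidates, keep=3):
    """Return the `keep` candidates whose vectors best match the prompt."""
    ranked = sorted(
        candidates,
        key=lambda c: cosine(prompt_vec, c["vec"]),
        reverse=True,
    )
    return ranked[:keep]

rng = random.Random(0)           # fixed seed so the toy run is repeatable
prompt_vec = [1.0, 0.0, 0.5]     # hypothetical encoding of the text prompt

# Stand-ins for 20 generated images; the small offset avoids an all-zero vector.
candidates = [
    {"id": i, "vec": [rng.random() + 0.01 for _ in range(3)]}
    for i in range(20)
]

shown = rerank(prompt_vec, candidates, keep=3)
print([c["id"] for c in shown])  # only these three would reach the user
```

The other 17 images are simply thrown away, which is part of why the results you see look better than what the generator produces on average.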

But there’s still weaknesses in this generation process, right?

I can’t stress enough that this isn’t intelligence. The algorithms don’t “understand” what the words mean or the images in the same way you or I do. It’s kind of like a best guess based on what it’s “seen” before. So there’s quite a few limitations both in what it can do, and what it does that it probably shouldn’t do (such as potentially graphic imagery).

— Matt Bevan (@MatthewBevan) June 9, 2022

OK, so if the machines are making pictures on request now, how many artists will this put out of work?

For now, these algorithms are largely restricted or expensive to use. I’m still on the waiting list to try DALL·E. But computing power is also getting cheaper, there are many huge image datasets, and even regular people are creating their own models. Like the one we used to create the kangaroo images. There’s also a version online called DALL·E mini, which is the one that people are using, exploring and sharing online to create everything from Boris Johnson eating a fish to kangaroos made of cheese.

I doubt anyone knows what will happen to artists. But there are still so many edge cases where these models break down that I wouldn’t be relying on them exclusively.

I have a looming feeling AI generated art will devour the economic sustainability of being an illustrator

not because art will be replaced by AI as a whole - but because it'll be so much cheaper and good enough for most people and businesses

Are there other issues with making images based purely on pattern-matching and then marking themselves on their answers? Any questions of bias, say, or unfortunate associations?

Something you’ll notice in the corporate announcements of these models is they tend to use innocuous examples. Lots of generated images of animals. This speaks to one of the massive issues with using the internet to train a pattern-matching algorithm – so much of it is absolutely terrible.

A couple of years ago a dataset of 80m images used to train algorithms was taken down by MIT researchers because of “derogatory terms as categories and offensive images”. Something we’ve noticed in our experiments is that “businessy” words seem to be associated with generated images of men.

So right now it’s just about good enough for memes, and still makes weird nightmare images (especially of faces), but not as much as it used to. But who knows about the future. Thanks Josh.
